When was the last time you decided to update the dependencies of one of your projects? And not because you needed a new feature, but just to get the latest security patches for a project that might be running on the public internet? I had the same problem, and my answer was: never. I didn't update most dependencies of my own software until sometime early last year.

TL;DR: the setup was very easy, and I sleep better now because I know I will be notified when one of my applications is vulnerable. The two tools I use for this are Dependabot for GitHub projects and Renovate for projects on my self-hosted GitLab instance.

Both tools work with a wide range of package managers, e.g. Go modules, npm, pip and even Docker.

Features

The main feature of automated updates is rather obvious: you get new upstream (security) fixes automatically. They arrive as a new PR/MR in your repository, so there is no need to keep track of the dependencies you use or to subscribe to new releases manually. What kind of updates you get depends on your configuration and on the version locked in your package manager's lock file. I normally enable all updates, because most of my projects have at least some tests covering the main business logic (sometimes even end-to-end tests), which makes me reasonably confident that the app still works with a new version of a dependency.
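Both Dependabot and Renovate let you filter updates by semantic-versioning level (major, minor, patch), which is a convenient knob for deciding what you receive. The idea behind that classification can be sketched in Go as follows. This is a minimal illustration assuming plain vMAJOR.MINOR.PATCH tags without pre-release suffixes; Classify and UpdateKind are hypothetical names, not part of either tool.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// UpdateKind says how far a candidate version moves away from the locked one.
type UpdateKind string

const (
	None  UpdateKind = "none"
	Patch UpdateKind = "patch"
	Minor UpdateKind = "minor"
	Major UpdateKind = "major"
)

// parseVersion splits "vMAJOR.MINOR.PATCH" into three integers.
// Pre-release and build suffixes (e.g. "-rc.1") are not supported in this sketch.
func parseVersion(v string) ([3]int, error) {
	var out [3]int
	parts := strings.Split(strings.TrimPrefix(v, "v"), ".")
	if len(parts) != 3 {
		return out, fmt.Errorf("unsupported version %q", v)
	}
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil {
			return out, fmt.Errorf("unsupported version %q: %w", v, err)
		}
		out[i] = n
	}
	return out, nil
}

// Classify reports which semver component changes between locked and candidate.
// It assumes candidate is newer than locked; downgrades are not detected.
func Classify(locked, candidate string) (UpdateKind, error) {
	a, err := parseVersion(locked)
	if err != nil {
		return None, err
	}
	b, err := parseVersion(candidate)
	if err != nil {
		return None, err
	}
	switch {
	case a[0] != b[0]:
		return Major, nil
	case a[1] != b[1]:
		return Minor, nil
	case a[2] != b[2]:
		return Patch, nil
	default:
		return None, nil
	}
}

func main() {
	for _, candidate := range []string{"v1.4.3", "v1.5.0", "v2.0.0"} {
		kind, err := Classify("v1.4.2", candidate)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("v1.4.2 -> %s: %s update\n", candidate, kind)
	}
}
```

With a classification like this, a policy such as "apply patch updates right away, review major updates by hand" becomes a simple switch on the result.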

Requirements

The one thing you really need to use these tools is tests. Without tests, you can never be sure whether a package update breaks features. This is especially true for frontend applications, which tend to rely on many external packages (as does the JS/TS ecosystem in general). Unit tests are often not enough either, because you also want to update dependencies such as your ORM or database driver, which unit tests don't cover and which need integration or end-to-end tests.

If you trust your tests enough, you can even merge PRs/MRs automatically and rely on the tests to ensure that everything still works as expected. In my opinion, this is the goal you should aim for.

The process

Let's walk through the setup with GitHub's Dependabot. First, you have to enable Dependabot for your repository. This is as easy as adding the following file to your repository as .github/dependabot.yaml:

```yaml
version: 2
updates:
  - package-ecosystem: "gomod"
    directory: "/"
    schedule:
      interval: "daily"
```

This configuration makes Dependabot check the Go modules in the repository root once a day. Then you just have to wait for a dependency update or a vulnerability.

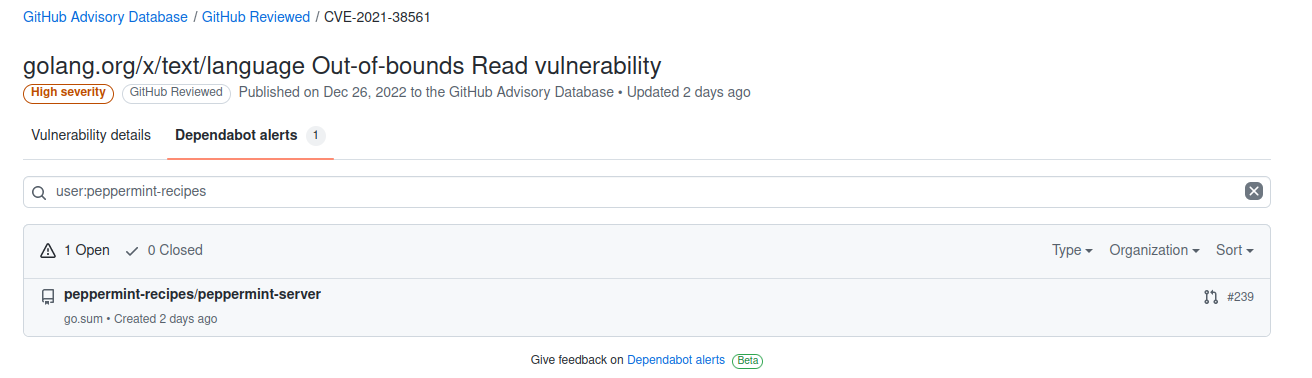

Once one of your projects has a vulnerable dependency, you will get a Dependabot alert and a notification by email.

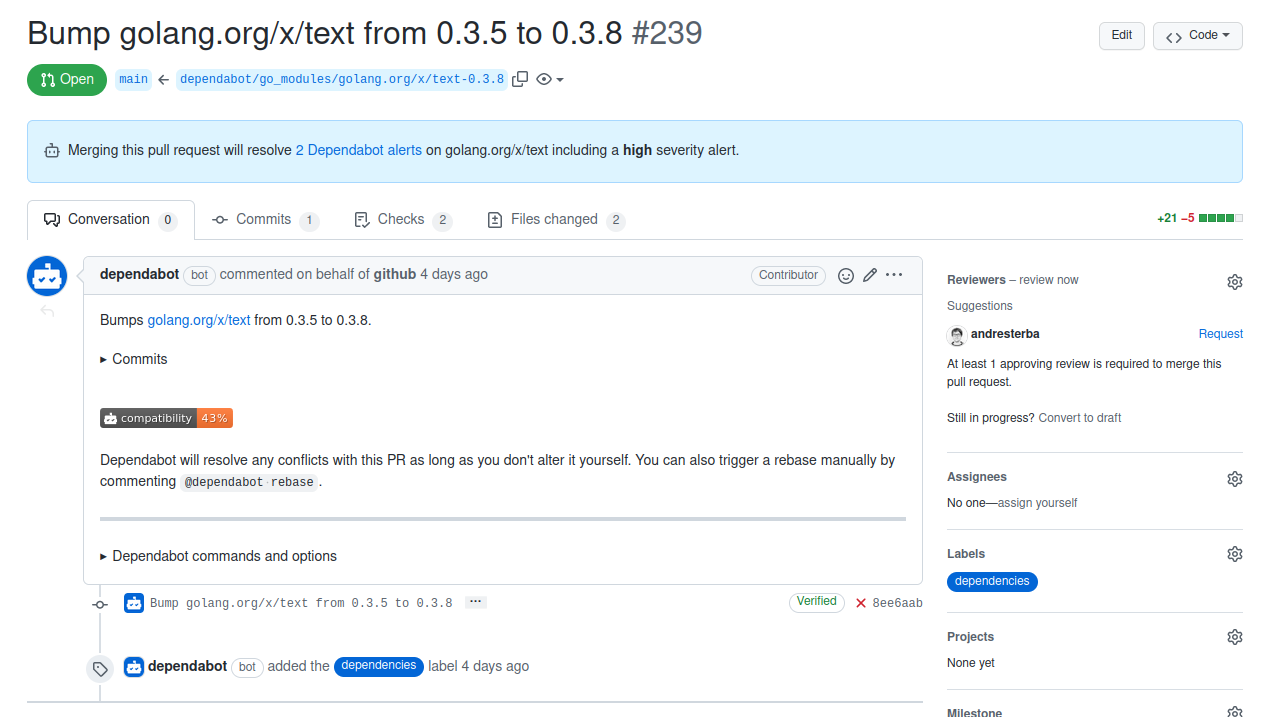

Dependabot will then create a pull request in the affected project.

All you have to do is merge this PR, after making sure it doesn't break anything, of course.
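Conceptually, such an alert comes from checking whether the version in your lock file falls into an advisory's affected range, and the security PR bumps it to a release outside that range. The sketch below models that check in Go. It assumes a single range with an "introduced" and a "fixed" version (loosely inspired by the OSV advisory format); Advisory, IsAffected and the advisory ID are made up for illustration and are not how Dependabot is implemented.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Advisory is a simplified, hypothetical vulnerability record:
// versions in the half-open range [Introduced, Fixed) are affected.
type Advisory struct {
	ID         string
	Introduced string
	Fixed      string
}

// compare returns -1, 0 or 1 for two "vMAJOR.MINOR.PATCH" strings.
// Missing or malformed components are treated as 0 to keep the sketch short.
func compare(a, b string) int {
	pa := strings.Split(strings.TrimPrefix(a, "v"), ".")
	pb := strings.Split(strings.TrimPrefix(b, "v"), ".")
	for i := 0; i < 3; i++ {
		var x, y int
		if i < len(pa) {
			x, _ = strconv.Atoi(pa[i])
		}
		if i < len(pb) {
			y, _ = strconv.Atoi(pb[i])
		}
		if x < y {
			return -1
		}
		if x > y {
			return 1
		}
	}
	return 0
}

// IsAffected reports whether the locked version lies in the affected range.
func (a Advisory) IsAffected(locked string) bool {
	return compare(locked, a.Introduced) >= 0 && compare(locked, a.Fixed) < 0
}

func main() {
	adv := Advisory{ID: "EXAMPLE-0001", Introduced: "v1.2.0", Fixed: "v1.4.5"}
	for _, locked := range []string{"v1.1.9", "v1.4.2", "v1.4.5"} {
		if adv.IsAffected(locked) {
			fmt.Printf("%s is affected by %s, update to %s\n", locked, adv.ID, adv.Fixed)
		} else {
			fmt.Printf("%s is not affected by %s\n", locked, adv.ID)
		}
	}
}
```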

Risks

All this sounds almost too good to be true, and I won't hide the fact that it also opens up new attack vectors.

The first one is dependency confusion, which (in short) can be used to replace your private dependencies with malware-infected ones. It is possible because some package managers (like npm or pip) also query public registries that host packages under the same names, even if you configured a private registry, and may install the public package instead, for example when it has a higher version. 1
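The mechanism can be illustrated with a toy resolver in Go that sees the same package name on a private and a public registry. Everything here is hypothetical (the Candidate type, the registry labels, the version numbers), and it is a simplified model, not how npm or pip are implemented: a resolver that trusts every registry and picks the highest version lets an attacker win just by publishing a huge version number, while one pinned to the private registry ignores the public copy.

```go
package main

import "fmt"

// Candidate is one published copy of a package name on some registry.
type Candidate struct {
	Registry string
	Version  [3]int // MAJOR, MINOR, PATCH
}

// less reports whether version a sorts before version b.
func less(a, b [3]int) bool {
	for i := range a {
		if a[i] != b[i] {
			return a[i] < b[i]
		}
	}
	return false
}

// resolve returns the highest version among the candidates the filter
// accepts, and false if no candidate is accepted.
func resolve(cands []Candidate, accept func(Candidate) bool) (Candidate, bool) {
	var best Candidate
	found := false
	for _, c := range cands {
		if !accept(c) {
			continue
		}
		if !found || less(best.Version, c.Version) {
			best, found = c, true
		}
	}
	return best, found
}

func main() {
	cands := []Candidate{
		{Registry: "private", Version: [3]int{1, 3, 0}}, // your internal package
		{Registry: "public", Version: [3]int{99, 0, 0}}, // attacker's copy with the same name
	}

	// Naive: every registry is trusted equally, the highest version wins.
	anyRegistry := func(Candidate) bool { return true }
	if c, ok := resolve(cands, anyRegistry); ok {
		fmt.Printf("naive resolver installs %v from %s\n", c.Version, c.Registry)
	}

	// Pinned: the package name is bound to the private registry.
	privateOnly := func(c Candidate) bool { return c.Registry == "private" }
	if c, ok := resolve(cands, privateOnly); ok {
		fmt.Printf("pinned resolver installs %v from %s\n", c.Version, c.Registry)
	}
}
```

This is roughly why binding internal package names to a specific registry is a common mitigation.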

Another risk is pulling malicious code into your repository: an author who was trustworthy when you started your project may abandon their project and hand it over to someone with bad intentions, or the project may be compromised and an attacker may inject malicious code into it. 2

Many more examples of attacks on software supply chains are documented online (for example 3 4 5).

Another risk (the one you always have with software) is that updates introduce new bugs along with new features. You can sometimes mitigate this by only applying security updates, but eventually you will have to migrate to a newer, supported version of a dependency.

When to add a new dependency?

This is the question you should always ask yourself before adding a new dependency to a project, especially one you want to maintain for a long time. The answer depends on your ecosystem, i.e. how rich the language's standard library is, and on how much work you are willing to do yourself (re-inventing the wheel). If you are using a language with a rich standard library like Go and are willing to write some components yourself, like a more advanced HTTP server, you can avoid most of these problems.

On the other hand, you might spend your time re-inventing components that others have already built (and maybe built better in terms of security or performance). You have to weigh which decision is right in each case.

The future of our software supply chains

The dependencies of a project form its software supply chain. The name suggests that all components are interconnected, so you must ensure at least some level of integrity for these packages. I became aware of the topic through some scientific publications. 6 7 It is an active research topic, and some languages and build systems already provide checksums or other mechanisms to reduce the risks of consuming other people's software.

Conclusion

In my opinion, tools like Dependabot or Renovate are an easy way to keep your software up to date and secure. They are only consumers in the supply chain and therefore cannot mitigate the risks mentioned above on their own, but they are an essential part of developing software.

1. https://medium.com/@alex.birsan/dependency-confusion-4a5d60fec610
2. https://thehackernews.com/2021/07/malicious-npm-package-caught-stealing.html
3. https://secureteam.co.uk/news/vulnerabilities/javascript-supply-chain-attack-hits-millions-of-users/
4. https://blog.jscrambler.com/how-your-code-dependencies-expose-you-to-web-supply-chain-attacks
5. https://www.bleepingcomputer.com/news/security/dev-corrupts-npm-libs-colors-and-faker-breaking-thousands-of-apps/
6. https://arxiv.org/abs/2204.04008
7. https://dl.acm.org/doi/abs/10.1145/3510457.3513044